Some common operators are BashOperator, PythonOperator, MySqlOperator and S3FileTransformOperator. As you can tell, operators help you define tasks that follow a specific pattern. For example, the MySqlOperator creates a task that executes a SQL query, and the BashOperator creates one that executes a bash script.
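To make the "operator as a pattern" idea concrete, here is a minimal pure-Python sketch. It does not use Airflow. `ToyOperator`, `ToyPythonOperator` and `ToySqlOperator` are hypothetical stand-ins, and the standard library's `sqlite3` substitutes for MySQL. Each class fixes one pattern, such as calling a function or running a query. Each instance only supplies the parameters for that pattern.

```python
import sqlite3
from typing import Any, Callable


class ToyOperator:
    """Hypothetical base class: a task with an id and an execute() method."""

    def __init__(self, task_id: str) -> None:
        self.task_id = task_id

    def execute(self) -> Any:
        raise NotImplementedError


class ToyPythonOperator(ToyOperator):
    """Pattern: call a Python function (loosely mirrors PythonOperator)."""

    def __init__(self, task_id: str, python_callable: Callable[[], Any]) -> None:
        super().__init__(task_id)
        self.python_callable = python_callable

    def execute(self) -> Any:
        return self.python_callable()


class ToySqlOperator(ToyOperator):
    """Pattern: run one SQL query (loosely mirrors MySqlOperator; sqlite3 stands in for MySQL)."""

    def __init__(self, task_id: str, conn: sqlite3.Connection, sql: str) -> None:
        super().__init__(task_id)
        self.conn = conn
        self.sql = sql

    def execute(self) -> list:
        # The boilerplate (running the query, fetching rows) lives here once,
        # so every task built from this pattern only supplies its SQL.
        return self.conn.execute(self.sql).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE events (name TEXT)")
    conn.executemany("INSERT INTO events VALUES (?)", [("a",), ("b",)])

    count_events = ToySqlOperator("count_events", conn, "SELECT COUNT(*) FROM events")
    say_hello = ToyPythonOperator("say_hello", lambda: "hello")

    print(count_events.execute())  # [(2,)]
    print(say_hello.execute())     # hello
```

Real Airflow operators follow the same principle. The boilerplate lives in the operator class, so each task declaration stays short.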

#Airflow tutorial code#

Operators can be viewed as templates for predefined tasks, because they encapsulate boilerplate code and abstract away much of their logic. Whenever a new DAG run is initialized, all tasks are initialized as Task instances. This means that each Task instance is a specific run of the given task.
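The relationship between tasks, DAG runs and Task instances can be modeled in a few lines. This is a simplified, hypothetical model, not Airflow's own classes. The `TaskInstance` dataclass and the `create_dag_run` helper are illustrative names. The same task definitions are reused for every run. Each run creates its own instance of every task, keyed by the run's date and carrying its own state.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class TaskInstance:
    """One specific run of one task: (task_id, run date) plus a mutable state."""
    task_id: str
    run_date: date
    state: str = "scheduled"


def create_dag_run(task_ids: list[str], run_date: date) -> dict[str, TaskInstance]:
    """Hypothetical helper: a new DAG run initializes every task as a fresh TaskInstance."""
    return {task_id: TaskInstance(task_id, run_date) for task_id in task_ids}


if __name__ == "__main__":
    tasks = ["read_images", "train_model"]
    run_1 = create_dag_run(tasks, date(2024, 1, 1))
    run_2 = create_dag_run(tasks, date(2024, 1, 2))

    run_1["read_images"].state = "success"
    # Both runs share the task definitions but not the task instances.
    print(run_1["read_images"].state)  # success
    print(run_2["read_images"].state)  # scheduled
```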

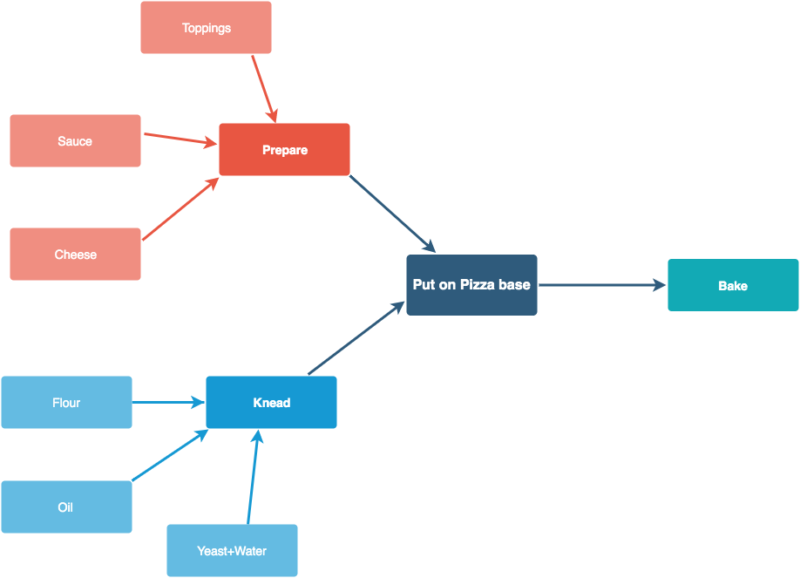

The DAG context manager accepts some global variables regarding the DAG, along with some default arguments. The default arguments are passed into all tasks and can be overridden on a per-task basis; the complete list of parameters can be found in the official docs. In this example, we set the DAG's start date and schedule it to be executed each day. The retries argument ensures that a task will be re-run once after a possible failure.

Tasks: Each node of the DAG represents a Task, meaning an individual piece of code. Each task may have some upstream and downstream dependencies, which express how tasks are related to each other and in which order they should be executed.
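The behaviour described above can be imitated with the standard library alone. It has three parts: shared default arguments that individual tasks may override, one retry after a failure, and execution in dependency order. The code below is not Airflow code. `DEFAULT_ARGS`, `run_dag` and the task functions are hypothetical. `graphlib.TopologicalSorter` (Python 3.9+) stands in for the way Airflow orders upstream and downstream tasks.

```python
from graphlib import TopologicalSorter
from typing import Callable, Optional

# Defaults shared by every task; any task may override a key (hypothetical names).
DEFAULT_ARGS = {"retries": 1}


def run_dag(
    tasks: dict[str, Callable[[], None]],
    upstream: dict[str, set[str]],
    task_args: Optional[dict[str, dict]] = None,
) -> list[str]:
    """Run tasks in dependency order, retrying each one up to its `retries` setting.

    Returns the task ids in the order they completed.
    """
    task_args = task_args or {}
    # Every task is a node; its value is the set of tasks that must finish first.
    graph = {task_id: upstream.get(task_id, set()) for task_id in tasks}
    completed = []
    # static_order() yields a task only after all of its upstream tasks
    # (and raises graphlib.CycleError if the graph is not acyclic).
    for task_id in TopologicalSorter(graph).static_order():
        # Per-task arguments override the shared defaults.
        args = {**DEFAULT_ARGS, **task_args.get(task_id, {})}
        attempts = args["retries"] + 1
        for attempt in range(1, attempts + 1):
            try:
                tasks[task_id]()
                break
            except Exception:
                if attempt == attempts:
                    raise  # out of retries: fail the whole run
        completed.append(task_id)
    return completed


if __name__ == "__main__":
    calls = {"read_images": 0}

    def read_images() -> None:
        calls["read_images"] += 1
        if calls["read_images"] == 1:
            raise ConnectionError("simulated transient failure")

    def train_model() -> None:
        pass

    order = run_dag(
        {"read_images": read_images, "train_model": train_model},
        upstream={"train_model": {"read_images"}},
    )
    print(order)                 # ['read_images', 'train_model']
    print(calls["read_images"])  # 2 (failed once, succeeded on the retry)
```

In the example, `read_images` fails once and then succeeds on its single retry. `train_model` runs only after `read_images` has finished.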

#Airflow tutorial download#

You can download the official docker compose file from here:

#Airflow tutorial install#

The easiest way to install Airflow is using Docker Compose. When installing Airflow in its default edition, you will see four different components:
- Webserver: Airflow's user interface (UI), which allows you to interact with it without the need for a CLI or an API. From there, you can execute and monitor pipelines, create connections with external systems, inspect their datasets, and much more.
- Executor: Executors are the mechanism by which pipelines run. There are many different types, which run pipelines locally on a single machine or in a distributed fashion. A few examples are LocalExecutor, SequentialExecutor, CeleryExecutor and KubernetesExecutor.
- Scheduler: The scheduler is responsible for triggering tasks at the correct time, re-running pipelines, backfilling data, ensuring task completion, etc.
- PostgreSQL: A database where all pipeline metadata is stored. PostgreSQL is the typical choice, but other SQL databases are supported too.

Because it handles the authoring, scheduling, and monitoring of pipelines, Airflow is an ideal solution for ETL and MLOps use cases. Example use cases include:
- Extracting data from many sources, aggregating and transforming it, and storing it in a data warehouse
- Extracting insights from data and displaying them in an analytics dashboard
- Training, validating, and deploying machine learning models
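As a rough mental model of how the scheduler and an executor divide the work, here is a standard-library sketch. It is not how Airflow is implemented, and `daily_run_dates` and `run_batch` are hypothetical names. The "scheduler" part decides which daily runs are due, including past runs that were missed (backfilling). The "executor" part decides how those runs are carried out. It can run them one after another, loosely like the SequentialExecutor, or concurrently in a thread pool on one machine.

```python
from concurrent.futures import ThreadPoolExecutor
from datetime import date, timedelta
from typing import Callable, Iterable


def daily_run_dates(start: date, until: date, already_ran: Iterable[date] = ()) -> list[date]:
    """Scheduler side: every day from `start` to `until` (inclusive) that has no run yet."""
    done = set(already_ran)
    candidates = (start + timedelta(days=i) for i in range((until - start).days + 1))
    return [day for day in candidates if day not in done]


def run_batch(job: Callable[[date], object], dates: list[date], parallel: bool = False) -> list:
    """Executor side: run the job once per date, sequentially or in a thread pool."""
    if not parallel:
        return [job(day) for day in dates]
    with ThreadPoolExecutor(max_workers=4) as pool:
        # map() returns results in input order even if jobs finish out of order.
        return list(pool.map(job, dates))


if __name__ == "__main__":
    # Jan 2 already ran, so only the missing days are scheduled (backfill).
    due = daily_run_dates(date(2024, 1, 1), date(2024, 1, 4), already_ran=[date(2024, 1, 2)])
    print(due)  # [datetime.date(2024, 1, 1), datetime.date(2024, 1, 3), datetime.date(2024, 1, 4)]
    print(run_batch(lambda day: f"processed {day.isoformat()}", due, parallel=True))
```

Keeping "what is due" separate from "how it runs" mirrors the split described above. In that split, you can swap the executor without changing how pipelines are scheduled.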

Imagine that you want to build a machine learning pipeline that consists of several steps, such as:
- Read an image dataset from cloud-based storage
- Train a deep learning model with the downloaded images

How would you schedule and automate this workflow? Cron jobs are a simple solution, but they come with many problems; most importantly, they won't allow you to scale effectively. Airflow, on the other hand, offers the ability to schedule and scale complex pipelines easily. It also enables you to automatically re-run them after failure, manage their dependencies, and monitor them using logs and dashboards.

Before we build this pipeline, let's understand the basic concepts of Apache Airflow.

What is Airflow? Apache Airflow is a tool for authoring, scheduling, and monitoring pipelines.

#Airflow tutorial how to#

Apache Airflow has become the de facto library for pipeline orchestration in the Python ecosystem. It has gained popularity over similar solutions due to its simplicity and extensibility. In this article, I will attempt to outline its main concepts and give you a clear understanding of when and how to use it.
